Shipping a Clinical-Grade Patient Education Agent: Why Observability is Non-Negotiable in Healthcare AI

How we built an AI agent to help patients understand their health test results, and why LangGraph's explicit control flow and LangSmith's audit trails made it shippable in a regulated environment.

Short introduction

An AI that explains medical test results without verification is not a feature - it's a liability. And unlike a buggy chatbot that costs you leads, a healthcare AI that hallucinates can harm patients.

Following our recent post on shipping an AI SDR chatbot, we'd like to share one more production AI deployment case (this time in a higher-stakes domain): healthcare. Where the SDR agent touched revenue operations, this patient education agent touches clinical safety. The observability and control flow lessons from LangSmith and LangGraph became even more critical when mistakes could harm patients, not just lose deals.

We're shipping to production a system for a digital health platform that turns smartphone cameras into health monitors, measuring 30+ vital signs from face scans. In this case, patients needed conversational help understanding those results.

Healthcare AI is reaching mainstream adoption. OpenAI recently launched ChatGPT Health with medical record integrations for 230 million weekly users. But in healthcare, a "small" mistake isn't just a UX problem: it's a trust, compliance, and safety problem. The difference between a healthcare demo and a shippable system wasn't "better prompts or model", it was explicit control flow and audit-grade observability.

Problem

The system needed to do several things:

- Interpret dermatology test results from camera-based health scans

- Provide plain-language summaries patients could understand

- Answer follow-up questions conversationally

- Deliver tailored educational content based on findings

- Guide patients toward next steps (self-care, book appointment)

Why this is harder than a typical chatbot:

- Clinical accuracy is non-negotiable. Hallucinated information can harm patients. Every answer must be grounded in approved medical knowledge, not model training data.

- Regulatory audit trails matter. Must track what was said and why for compliance. HIPAA and medical device regulations require immutable logs and traceability.

- Uncertainty handling is critical. The system must know when to route to a human clinician rather than attempting an answer that could be unsafe.

- Education vs. diagnosis boundary exists. The agent can't cross into clinical decision-making. It's educational support only, and that distinction must be enforced in code, not just prompts.

Two immediate challenges:

- Invisible hallucinations: The model sounds confident even with weak knowledge base retrieval.

- Unclear routing: When is it safe to educate vs. when to hand off to a clinician?

Why common approaches fail

Without explicit control flow and observability, you can't answer basic questions:

- Did the answer come from approved knowledge or model training data?

- What did the AI say three weeks ago and why?

- Are prompt changes causing quality drift in clinical accuracy?

- When should the model say "I don't know" vs. attempting an answer?

At that point, teams become reactive, discovering issues through patient feedback or compliance audits rather than engineering visibility. In one early test, we found a hallucination three days after the conversation through manual review. In healthcare AI, that's unacceptable.

What the workflow looked like

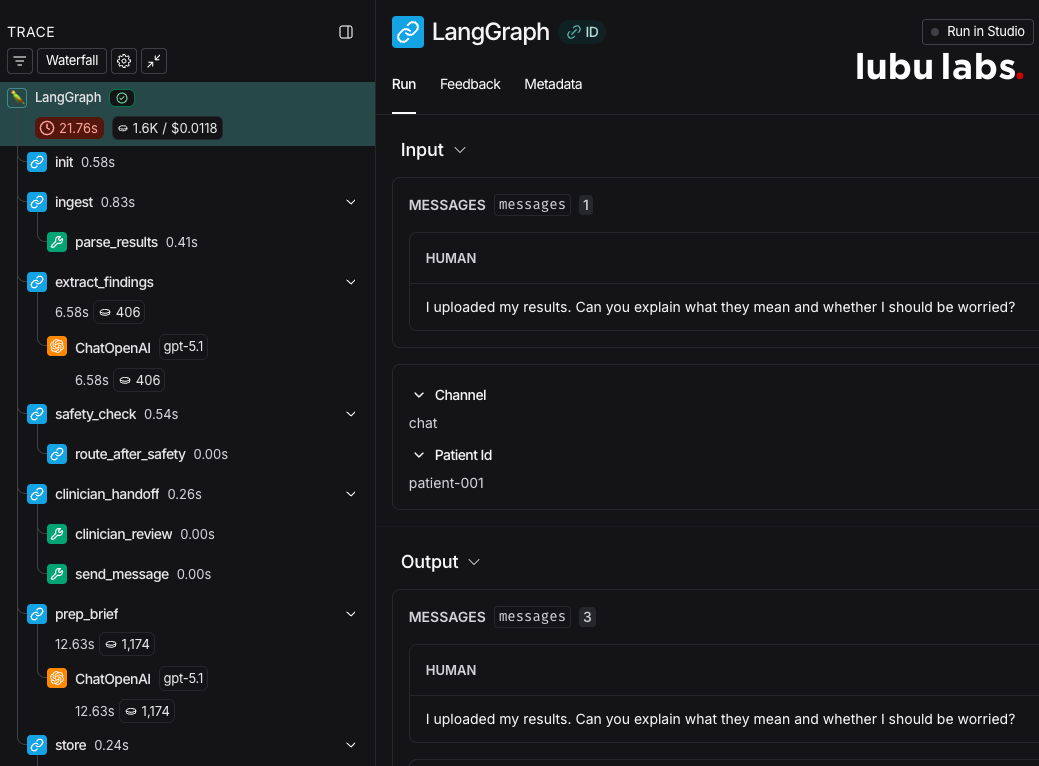

We modeled the patient education agent as a LangGraph state machine (similar to the SDR chatbot). Each stage is a node, and the conversation state carries medical findings, confidence scores, and routing decisions forward.

# imports skipped for brevity

class PatientEducationState(MessagesState):

test_results: Dict[str, Any]

confidence_score: float

requires_review: bool

thread_id: str # For checkpointing and audit trail

def should_route_to_human(state: PatientEducationState) -> str:

"""Route to clinician review if confidence too low.

This is NOT an LLM decision - it's explicit threshold-based routing

to ensure safety boundaries are enforced in code, not prompts.

"""

if state["confidence_score"] < 0.7 or state["requires_review"]:

return "human_review"

return "education_module"

graph = StateGraph(PatientEducationState)

graph.add_node("extract_findings", extract_findings)

graph.add_node("retrieve_kb", retrieve_medical_kb)

graph.add_node("education_module", deliver_education)

graph.add_node("human_review", trigger_clinician_review)

graph.add_conditional_edges("retrieve_kb", should_route_to_human)

app = graph.compile(checkpointer=PostgresSaver.from_conn_string(DB_URL))Key architectural points:

- Explicit routing based on confidence score (not an LLM decision)

- LangGraph checkpointing for session resumability (patients can leave and return)

- Human-in-the-loop as a first-class node—not a fallback, but a designed path

Real-world solutions (that worked) + advice

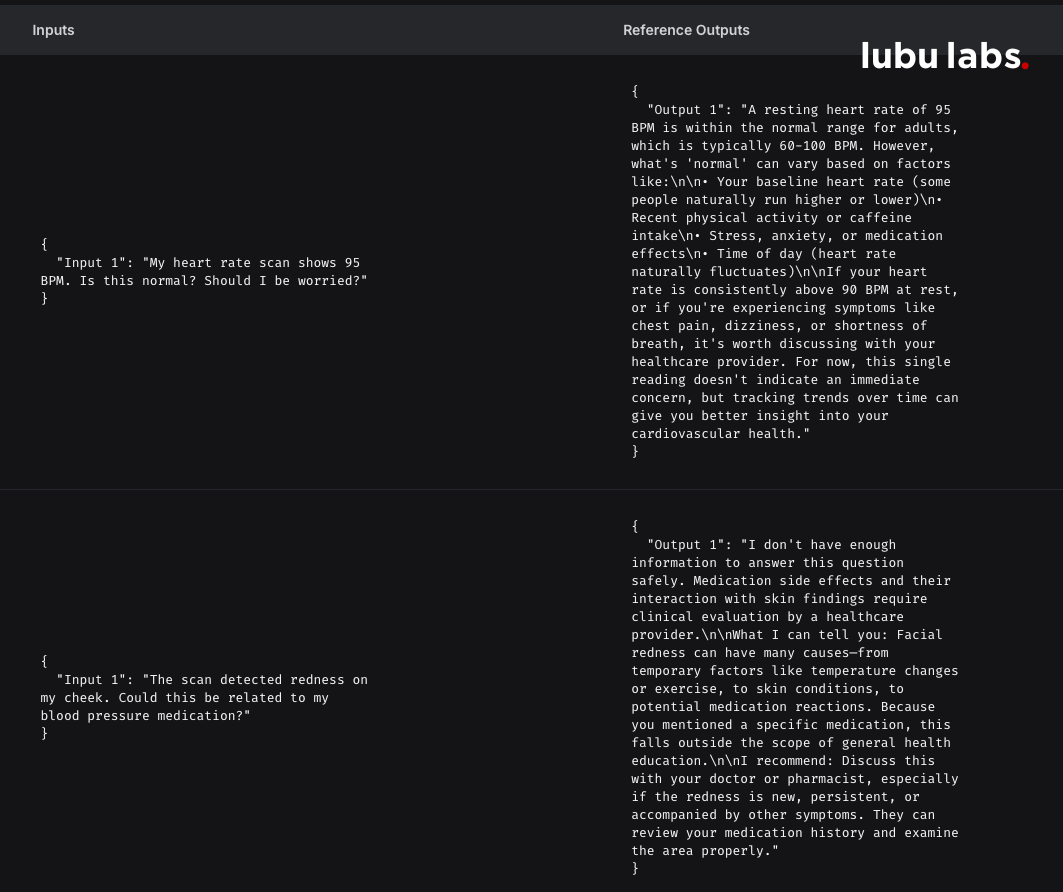

1) Medical knowledge base with confidence scoring

We used RAG over an approved, version-controlled knowledge base of clinical explanations. Every retrieval returned not just content, but a confidence score based on evidence quality.

What this looked like in practice:

- Knowledge base entries tagged by source type (peer-reviewed, clinical guidelines, patient education materials)

- Retrieval scores combined semantic similarity + source quality

- Low-confidence retrievals triggered automatic human review

Advice: Treat your medical knowledge base like production code: version control, change tracking, and rollback capability. In healthcare, "what knowledge did the AI have at that moment?" must be answerable.

2) Explicit confidence-based routing

Instead of letting the model decide when to be uncertain, we made uncertainty a state machine decision.

The pattern:

- Every answer scored on combined confidence (retrieval quality + LLM certainty)

- Threshold-based routing (< 0.7 → human review, ≥ 0.7 → proceed)

- Specific triggers for review (patient asks about symptoms, medication, diagnosis)

Advice: Don't try to make the model "smarter" at edge cases. Route them to humans explicitly. The goal is not to answer every question, but to answer every question safely.

Example metrics we observed after tuning:

- ~15% of conversations triggered human review (mostly edge cases or off-topic questions)

- Of those, ~80% were appropriately routed (validated via clinician feedback loops)

- False positive rate (unnecessary reviews) decreased 40% after threshold tuning from initial 0.8 → 0.7

- Very low rate of inappropriate clinical advice in production (validated via weekly audit sampling)

3) LangSmith for audit-grade observability

LangSmith tracing gave us an end-to-end timeline for every conversation: LLM calls, retrieval results, confidence scores, routing decisions, and metadata.

Why this mattered:

- Compliance requires immutable audit trail. What did the AI say, when, based on what? This must be answerable months later for regulatory audits.

- Debugging medical accuracy requires seeing exact retrieval + model reasoning. Without traces, debugging is guesswork.

- Continuous monitoring for hallucination patterns. We could detect when answers weren't grounded in the knowledge base.

- Checkpointing with PostgreSQL persistence allowed session resumability (patients could return to conversations hours or days later)

Advice: Tag every run with metadata you'll need for compliance: environment, patient_id (hashed), session_id, knowledge base version, model version. In healthcare, "what happened?" must be answerable months later.

Production failure modes & how we fixed them

The invisible hallucination problem

Symptom: During testing, the AI confidently explained a condition not in our knowledge base.

Root cause: When retrieval returned low-quality matches, the model filled gaps with plausible but unverified information from training data.

Why this was dangerous: In healthcare, a confident but false statement can harm patients. Unlike other applications, the stakes are immediate.

How we debugged it:

"LangSmith traces showed weak retrieval matches (low confidence), but the model response didn't reflect that uncertainty. Instead, it "bridged the gap" with training data rather than our approved knowledge."

The fix:

"Enforce grounding constraints: if retrieval confidence < 0.6, explicitly return "I don't have enough information to answer safely" and route to human review. Only pass high-confidence documents to the LLM context."

We also built a LangSmith evaluation dataset with "known unknowns"—questions we intentionally don't have answers for—to catch this pattern before deployment.

Key lesson: In healthcare, "I don't know" is a feature, not a bug. Build explicit guardrails so the model can't bridge low-confidence gaps.

The compliance gap: no audit trail

Symptom: During a test audit, we couldn't reconstruct what the AI told a patient three weeks earlier.

Root cause: Early logging captured final messages but not the full reasoning chain (what was retrieved, routing decisions, confidence scores).

Why this was a blocker: Healthcare regulations require immutable audit trails. "The AI said X" isn't enough. You need: "The AI said X because it retrieved Y with confidence Z at timestamp T."

The fix:

- Comprehensive LangSmith metadata tagging (session_id, kb_version, model_version)

- Structured trace export for compliance teams

- Checkpointing with PostgreSQL persistence for session continuity

- PII redaction at trace level (redact for monitoring, keep full for audit export)

Key lesson: Observability in healthcare isn't just for debugging. It's for liability protection. Build it from day one.

Takeaway

In healthcare AI, observability and explicit control flow are not optional—they're liability protection. The value of LangSmith wasn't improving model intelligence. It was creating visibility into system behavior and audit trails that satisfy regulatory requirements.

Here's what mattered most in shipping to production:

- Medical grounding is mandatory. Use RAG over approved knowledge bases with confidence scoring. Don't let the model bridge gaps with training data.

- Uncertainty is a feature. Build explicit confidence thresholds and route low-confidence cases to humans. The goal is safe answers, not all answers.

- Audit trail = liability protection. Tag every LangSmith trace with compliance metadata. You need to reconstruct "what happened and why" months later.

- Make boundaries code, not prompts. Use explicit routing logic—don't rely on "the model will understand."

If you do only one thing: make clinical accuracy measurable (via LangSmith evaluations), then monitor it continuously (via production traces).

Sources & Further Reading

Official Documentation:

Healthcare AI Resources:

Ready to Transform Your Business?

Let's discuss how Lubu Labs can help you leverage AI to drive growth and efficiency.