AI Framework vs. Custom Stack: What Actually Works in Production?

A pragmatic guide to choosing between AI frameworks versus building custom orchestration for production-ready AI systems.

Many enterprises get stuck in the AI proof-of-concept phase with impressive demos that never become stable production systems. In fact, industry surveys show that nearly 80% of AI projects fail, and Gartner reports that, on average, only ~48% of AI projects make it into production.

"Of the 200 AI deployments I’ve led, only 48 made it into production and are still in use today." - part of Gartner's survey

The gap between AI’s promise and real-world impact is still a major problem as we head into 2026.

The purpose of this blog

There are plenty of beginner guides on the internet, but very few cover what it takes to succeed with AI in production. These are the topics that actually matter once real users show up, yet they are often ignored:

- failure modes

- cost and latency trade-offs

- framework choice and migration pain

- regulated domains and compliance constraints

- real-world data challenges

- evaluation

- observability

- finding the right partners / employees

Across my posts, I will share more advanced AI engineering concepts drawn from real-world experience, without rehashing the basics.

In conversations with CTOs and Heads of AI, one theme kept coming up: the hardest work starts after the PoC.

This first post focuses on an early decision that often determines how painful that journey becomes: When to use an AI framework vs. when to build a custom stack - and how to choose pragmatically.

Frameworks vs. custom stacks: choosing pragmatically

When developing AI applications, teams often face a choice:

- Use an AI framework (move fast, borrow patterns), or

- Build custom orchestration (own the control-plane).

"Frameworks are tools, not goals." — Engineering Lead at major e-commerce platform

Rather than chasing hype, the teams that ship tend to pick the stack that fits their use case, constraints, and team maturity.

The regret tax

I’ve seen teams where, midway through development, someone says: “Damn, we should have chosen a different approach.”

Switching core tech late is painful - I learned this firsthand while building a chatbot for a major e-commerce platform. We picked a framework early on, later realized another would have been a better fit, and by then the migration cost (rewiring chains, callbacks, memory etc.) was simply too high.

The ecosystem (and what each tool is actually good at)

To reduce regret, it helps to understand the main frameworks and their “sweet spots” — plus their popularity signals.

Below are some of the most popular AI frameworks, a short production-oriented take on each, and their recent PyPI/NPM download counts (directional — downloads are not production usage).

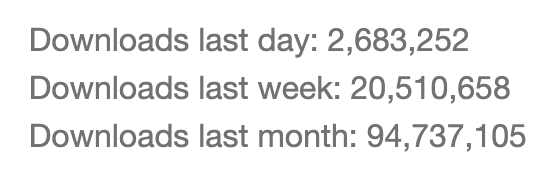

LangChain – widely-used Python/JS library for chaining LLM prompts, tools, and memory. It has a huge ecosystem of integrations (APIs, databases, etc.).

- What it’s good at: prototyping conversational agents and RAG pipelines quickly.

- Why it wins: beginner-friendliness and speed to first demo.

- Reality check: you can write your own “LangChain” in clean Python, but the prebuilt patterns lower the barrier for teams who haven’t built agents before.

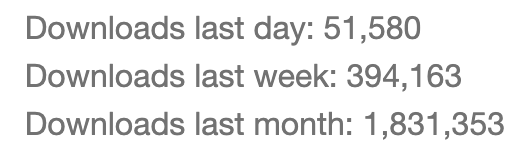

- Signal: ~94.7M downloads last month (as of 05.01.2026).
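To make the "clean Python" point concrete, here is a minimal sketch of a prompt, model, and parser chain without any framework. `fake_llm` is a hypothetical stand-in for a real model call, and none of these names come from LangChain.

```python
from typing import Callable

Step = Callable[[str], str]


def chain(*steps: Step) -> Step:
    """Compose steps left to right: each output becomes the next input."""
    def run(value: str) -> str:
        for step in steps:
            value = step(value)
        return value
    return run


def build_prompt(question: str) -> str:
    return f"Answer briefly: {question}"


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call (an assumption of this sketch).
    return f"  [model output for: {prompt}]  "


def parse_output(raw: str) -> str:
    # Output parsing is reduced to whitespace cleanup here.
    return raw.strip()


qa = chain(build_prompt, fake_llm, parse_output)
result = qa("What is RAG?")
print(result)
```

The composition itself is a few lines. What a framework adds on top is the catalogue of integrations and prebuilt patterns, which is exactly the trade-off described above.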

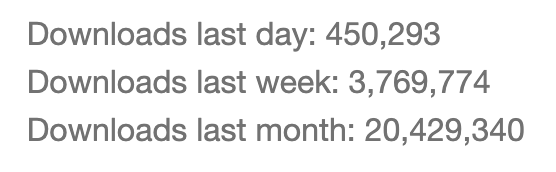

LangGraph – a newer framework that brings explicit, graph-based control flow to LLM agents. LangGraph treats an AI workflow as a stateful, versioned, observable graph (cycles are allowed, so it is not strictly a DAG), enabling features like branching, looping, and retries with built-in logging.

- What it’s good at: branching, looping, retries, checkpointing, and making state explicit.

- Why it wins: when “agent as a loop” stops being cute and starts being a reliability problem.

- In one production setup, LangGraph was the GOAT for enterprise-grade multi-step and multi-agent workflows because it forces you to make control flow explicit.

- Signal: ~20.4M downloads last month.

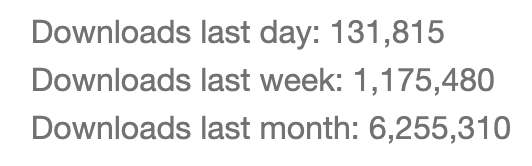

LlamaIndex – an open-source library focused on connecting LLMs with external data. Its center of gravity is clear: document-heavy RAG. In short, LlamaIndex is great for building knowledge assistants that ground answers in your enterprise data.

- What it’s good at: building RAG pipelines by indexing documents and retrieving relevant context at query time.

- Why it wins: if you’re building knowledge assistants or anything document-heavy, it’s often the most direct path.

- Personal note: I like that on their website they claim “agentic OCR and document-specific” instead of trying to be an “agent one-stop shop.”

- Signal: ~6.26M downloads last month.
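As a rough illustration of "index documents, then retrieve relevant context at query time", here is a toy sketch using only the standard library. It swaps real embeddings for simple word-count vectors, and `ToyIndex` and the sample documents are hypothetical. This is not LlamaIndex's API.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    # Lowercased word counts stand in for an embedding vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyIndex:
    def __init__(self, documents: list[str]) -> None:
        # "Indexing": precompute one vector per document.
        self.documents = documents
        self.vectors = [tokenize(d) for d in documents]

    def retrieve(self, query: str, top_k: int = 1) -> list[str]:
        # "Retrieval": rank documents by similarity to the query.
        q = tokenize(query)
        ranked = sorted(
            zip(self.vectors, self.documents),
            key=lambda pair: cosine(q, pair[0]),
            reverse=True,
        )
        return [doc for _, doc in ranked[:top_k]]


docs = [
    "Refunds are processed within 14 days of the return request.",
    "Our support team is available on weekdays from 9 to 5.",
    "Shipping to EU countries takes three to five business days.",
]
index = ToyIndex(docs)
query = "How long do refunds take?"
context = index.retrieve(query, top_k=1)

# The retrieved context is what grounds the model's answer.
prompt = f"Context:\n{context[0]}\n\nQuestion: {query}"
print(prompt)
```

A production pipeline replaces the word counts with embeddings and adds chunking, metadata filters, and re-ranking, which is the part a document-focused library takes off your hands.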

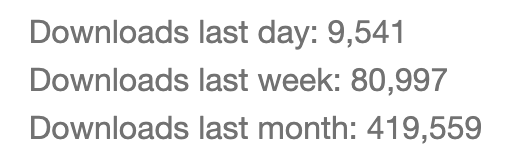

Haystack – an open-source framework by deepset for building NLP search and question-answering systems. It has great debugging/monitoring tools, giving you visibility into each step of a QA or RAG pipeline.

- What it’s good at: modular pipelines for retrieval + generation with strong visibility into each step.

- Why it wins: clear building blocks for retrieval + generation.

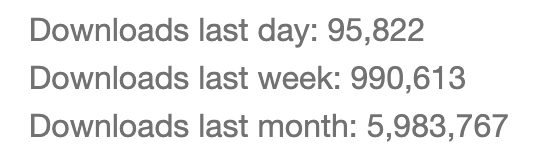

- Signal: ~420K downloads last month.

Semantic Kernel – a lightweight SDK from Microsoft for creating AI applications (supports C# and Python). It is enterprise-friendly if you live in Microsoft land.

- What it’s good at: integrating Azure OpenAI + adding memory, planning, and sequencing to prompts.

- Why it wins: if you’re already deep in Azure/Microsoft tooling and want agentic capabilities without adopting a new “framework universe.”

- Signal: ~1.83M downloads last month.

PydanticAI – a Python agent framework aimed at making LLM apps more robust and type-safe. It is schema-first, for cases where contracts matter more than “agent magic”. PydanticAI uses Pydantic models to validate and structure LLM outputs, ensuring responses conform to expected schemas. In essence, it brings the strictness of FastAPI-style data validation to generative AI.

- What it’s good at: validating and structuring LLM outputs into predictable Python objects.

- Why it wins: when you care more about contracts (JSON schemas, typed outputs, predictable tool inputs) than fancy orchestration.

- Signal: ~6M downloads last month.
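To show what "contracts over agent magic" means in practice, here is a minimal sketch that validates raw model output against a fixed schema. It deliberately uses only the standard library as a stand-in. PydanticAI does this with Pydantic models, and the `TicketTriage` fields and allowed categories are hypothetical.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class TicketTriage:
    category: str
    priority: int
    needs_human: bool


ALLOWED_CATEGORIES = {"billing", "shipping", "technical"}  # hypothetical values


def parse_triage(raw: str) -> TicketTriage:
    """Parse raw model output; raise ValueError on any contract violation."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    expected = {"category": str, "priority": int, "needs_human": bool}
    if set(data) != set(expected):
        raise ValueError(f"unexpected fields: {sorted(data)}")
    for name, typ in expected.items():
        value = data[name]
        # bool is a subclass of int in Python, so reject it for int fields.
        if not isinstance(value, typ) or (typ is int and isinstance(value, bool)):
            raise ValueError(f"{name} must be {typ.__name__}")
    if data["category"] not in ALLOWED_CATEGORIES:
        raise ValueError(f"unknown category: {data['category']}")
    if not 1 <= data["priority"] <= 5:
        raise ValueError("priority must be between 1 and 5")
    return TicketTriage(**data)


good = parse_triage('{"category": "billing", "priority": 2, "needs_human": false}')

try:
    parse_triage('{"category": "billing", "priority": "high", "needs_human": false}')
    rejected = False
except ValueError:
    rejected = True

print(good, rejected)
```

The point is that downstream code only ever sees a `TicketTriage` or an explicit error, never a half-valid dictionary. A schema-first framework gives you the same guarantee with far less hand-written checking.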

CrewAI – an open-source Python framework for orchestrating multiple AI agents that collaborate on tasks. CrewAI is designed for building multi-agent systems (a “crew” of agents) that can communicate and work together to solve complex problems.

- What it’s good at: role-specialized agents coordinating on complex tasks.

- Signal: ~2.57M downloads last month.

Mastra – TypeScript-first framework for building AI agents and workflows with a modern web stack. Mastra enables you to define agent workflows, memory, tools, and RAG pipelines all in TypeScript, with an interactive dev server and built-in observability. The goal is to let JavaScript/TypeScript developers quickly go from idea to production with AI agents, offering templates and an open-source runtime that you can self-host.

- What it’s good at: JS/TS-native agent workflows, tools, memory, and RAG patterns.

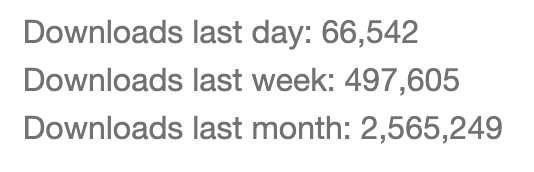

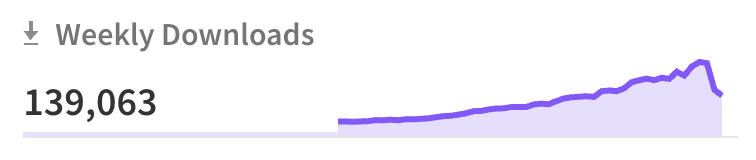

- Signal: npm shows ~140K weekly downloads for @mastra/core.

A common selection pattern based on downloads

- LangChain for fast iteration and onboarding teams into the space (especially when they haven’t built agents before).

- LangGraph when you need tighter control, explicit control-flow, and reliability for complex/multi-step systems.

- LlamaIndex when the center of gravity is documents + retrieval (RAG copilots, document workflows).

Caveat: download numbers are useful as a gravity metric, but they don’t tell you how many teams are actually running these at scale in production. Use them as a signal, not a decision rule.

Where frameworks shine (and where they don’t)

For quick prototypes or internal tools, high-level frameworks can dramatically speed up development: you can compose prompts, tools, memory, and logging in a few lines.

“We mostly use LangChain, LangGraph, or PydanticAI, plus custom Python for our projects,” one Head of AI told me, “and we try to use community-supported tools like Langfuse for monitoring.”

In practice, off-the-shelf frameworks + add-ons cover a lot of ground and help teams ship an MVP fast. LangChain’s ecosystem in particular makes it a common starting point.

The hidden bill: production complexity

But frameworks don’t remove production complexity - they mostly postpone it. The default “agent loop” style is flexible, but as workflows grow, control flow can become harder to reason about:

- retries

- tool failures

- partial results

- edge cases piling up

At that point, teams usually add guardrails (timeouts, max steps, budgets), stronger error handling, and better tracing, or they “graduate” to a more structured orchestration model.
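A minimal sketch of what those guardrails can look like around a generic agent loop. The `Guardrails` defaults, the `StepFn` contract, and the per-step cost figures are all assumptions for illustration, not values from any framework.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Guardrails:
    max_steps: int = 5
    max_seconds: float = 10.0
    max_cost_usd: float = 0.50


@dataclass
class RunResult:
    answer: Optional[str]
    stop_reason: str
    steps: int
    cost_usd: float


# A step returns (answer or None, cost of this step in USD).
StepFn = Callable[[int], tuple[Optional[str], float]]


def run_agent(step: StepFn, limits: Guardrails) -> RunResult:
    started = time.monotonic()
    cost = 0.0
    for i in range(limits.max_steps):
        # Limits are checked between steps; a running step is not interrupted.
        if time.monotonic() - started > limits.max_seconds:
            return RunResult(None, "timeout", i, cost)
        if cost >= limits.max_cost_usd:
            return RunResult(None, "budget_exceeded", i, cost)
        try:
            answer, step_cost = step(i)
        except Exception as exc:
            # Surface tool failures as an explicit stop reason.
            return RunResult(None, f"tool_error: {exc}", i, cost)
        cost += step_cost
        if answer is not None:
            return RunResult(answer, "done", i + 1, cost)
    return RunResult(None, "max_steps", limits.max_steps, cost)


def never_finishes(i: int) -> tuple[Optional[str], float]:
    return None, 0.01


def finishes_on_third(i: int) -> tuple[Optional[str], float]:
    return ("42" if i == 2 else None), 0.01


looping = run_agent(never_finishes, Guardrails(max_steps=3))
finished = run_agent(finishes_on_third, Guardrails())
print(looping.stop_reason, finished.stop_reason)
```

Returning a `stop_reason` instead of raising makes every run end in a state you can count and alert on, which matters more than the exact limits you pick.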

Why newer orchestration approaches gained traction

The problems above are exactly what newer orchestration approaches set out to solve.

LangGraph was created to introduce explicit control flow, resiliency, and observability for long-running agents - treating the pipeline more like a real workflow with branching, looping, retries, and durable state/checkpointing.

Making control flow explicit in this way makes systems easier to debug:

- see which node failed

- reproduce runs by ID

- test workflow logic like you would any other state machine
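The three points above can be sketched with a tiny hand-rolled workflow runner: named nodes, explicit routing (including a loop), per-node retries, and a run ID attached to the log. This is plain Python written for illustration, not LangGraph's API, and `run_workflow`, the node names, and the retry defaults are hypothetical.

```python
import uuid
from typing import Any, Callable, Optional

State = dict[str, Any]
Node = Callable[[State], State]
Route = Callable[[State], Optional[str]]  # next node name, or None to stop


def run_workflow(nodes: dict[str, Node], routes: dict[str, Route], start: str,
                 state: State, max_retries: int = 1,
                 max_transitions: int = 20) -> dict[str, Any]:
    run_id = str(uuid.uuid4())  # a real system would persist the log under this ID
    log: list[tuple[str, int, str]] = []
    current: Optional[str] = start
    transitions = 0

    def result(failed_node: Optional[str]) -> dict[str, Any]:
        return {"run_id": run_id, "state": state, "failed_node": failed_node, "log": log}

    while current is not None:
        if transitions >= max_transitions:
            log.append((current, 0, "aborted: max_transitions"))
            return result(current)
        transitions += 1
        for attempt in range(max_retries + 1):
            try:
                state = nodes[current](dict(state))  # each node gets a copy
                log.append((current, attempt, "ok"))
                break
            except Exception as exc:
                log.append((current, attempt, f"error: {exc}"))
        else:
            return result(current)  # retries exhausted: name the failing node
        current = routes[current](state)
    return result(None)


calls = {"lookup": 0}


def lookup(state: State) -> State:
    calls["lookup"] += 1
    if calls["lookup"] == 1:
        raise RuntimeError("transient tool failure")  # first call fails
    return {**state, "facts": ["order shipped"]}


def draft(state: State) -> State:
    return {**state, "revisions": state.get("revisions", 0) + 1}


nodes = {"lookup": lookup, "draft": draft}
routes = {
    "lookup": lambda s: "draft",
    "draft": lambda s: "draft" if s["revisions"] < 2 else None,  # explicit loop
}
outcome = run_workflow(nodes, routes, "lookup", {})
print(outcome["run_id"], outcome["failed_node"], outcome["log"])
```

Because routing is just data and small functions, the workflow logic can be unit-tested without calling a model at all, which is the practical meaning of "test it like any other state machine".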

Similarly:

- schema-first frameworks like PydanticAI reduce chaos by validating structured outputs

- tools like Langfuse or LangSmith add tracing + evaluation so you can measure quality on real traffic, not just vibes
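To give a feel for what tracing adds, here is a minimal sketch of a decorator that records one span per pipeline step, with its status and duration. It keeps spans in an in-memory list as a stand-in for a tracing backend. The `traced` decorator and the step functions are hypothetical and do not reflect the Langfuse or LangSmith APIs.

```python
import functools
import time
from typing import Any, Callable

TRACE: list[dict[str, Any]] = []  # stand-in for a real tracing backend


def traced(name: str) -> Callable:
    """Record name, status, and duration for every call of the wrapped step."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            started = time.perf_counter()
            span: dict[str, Any] = {"name": name, "status": "ok"}
            try:
                return fn(*args, **kwargs)
            except Exception as exc:
                span["status"] = f"error: {type(exc).__name__}"
                raise
            finally:
                # Runs on success and failure, so no span is ever lost.
                span["duration_ms"] = (time.perf_counter() - started) * 1000
                TRACE.append(span)
        return wrapper
    return decorator


@traced("retrieve")
def retrieve(query: str) -> list[str]:
    return ["doc-1"]


@traced("generate")
def generate(query: str, docs: list[str]) -> str:
    if not docs:
        raise ValueError("no context")
    return f"answer based on {docs[0]}"


answer = generate("q", retrieve("q"))
for span in TRACE:
    print(span["name"], span["status"])
```

Once every step emits a span like this, quality, latency, and cost questions become queries over recorded runs rather than guesses.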

When a custom stack is the right call

Still, for mission-critical or heavily regulated systems, a custom stack can be the right call. In healthcare or finance, some teams intentionally trade speed for control:

- strict data boundaries

- self-hosting

- tight latency budgets

- compliance-driven architecture

For example, one healthcare AI team we spoke with “doesn’t rely on frameworks like LangChain or AutoGen. Our stack runs on Azure services, and we build the relevant orchestration ourselves,” according to their Head of AI.

This choice was driven by the sensitive nature of their data - they need full control over how data flows and how models behave. They even self-host LLMs in a secured environment rather than sending data to third-party APIs.

The pragmatic middle

On the other hand, not every project needs heavy engineering. The pragmatic approach I hear most often is:

"Use the highest-level tool that gets the job done without compromising critical requirements — and go custom only where you truly need it."

Many teams start with LangChain for quick wins, adopt LangGraph when complexity and reliability demands rise, and then add custom pieces for the most latency- or compliance-sensitive parts. As one great AI startup founder neatly summarized:

"LLMs may be probabilistic, but your architecture shouldn’t be. Choose your tools based on product complexity, risk tolerance, and team capabilities — not on what’s trending."

If you asked me personally: I’d choose LangGraph or PydanticAI for orchestration and LlamaIndex for document-heavy / complex RAG pipelines - and then I’d immediately ask the more important question:

How do we trace and observe what the agent actually did in production?

That’s the topic I’ll cover next.

Conclusion

Bridging the gap from prototype to production with AI takes technical depth and strategic pragmatism. The numbers in the introduction are a reminder that demos don’t equal deployments.

I’ve seen teams where the PoC looked “done”, but the real work started the day real users arrived:

- edge cases

- tool failures

- shifting requirements

- wrongly predicted user interactions

Frameworks vs. custom stacks is just one decision on that path. The real goal is simple: ship reliable value.

Start with the highest-level tooling that helps you move fast, then add structure (control flow, guardrails) and invest in observability as complexity grows.

In the next post, I’ll cover the question that usually matters more than the framework choice: how to trace, evaluate, and monitor agent behavior in production so quality, latency, and cost don’t drift over time.

Thanks for reading.
